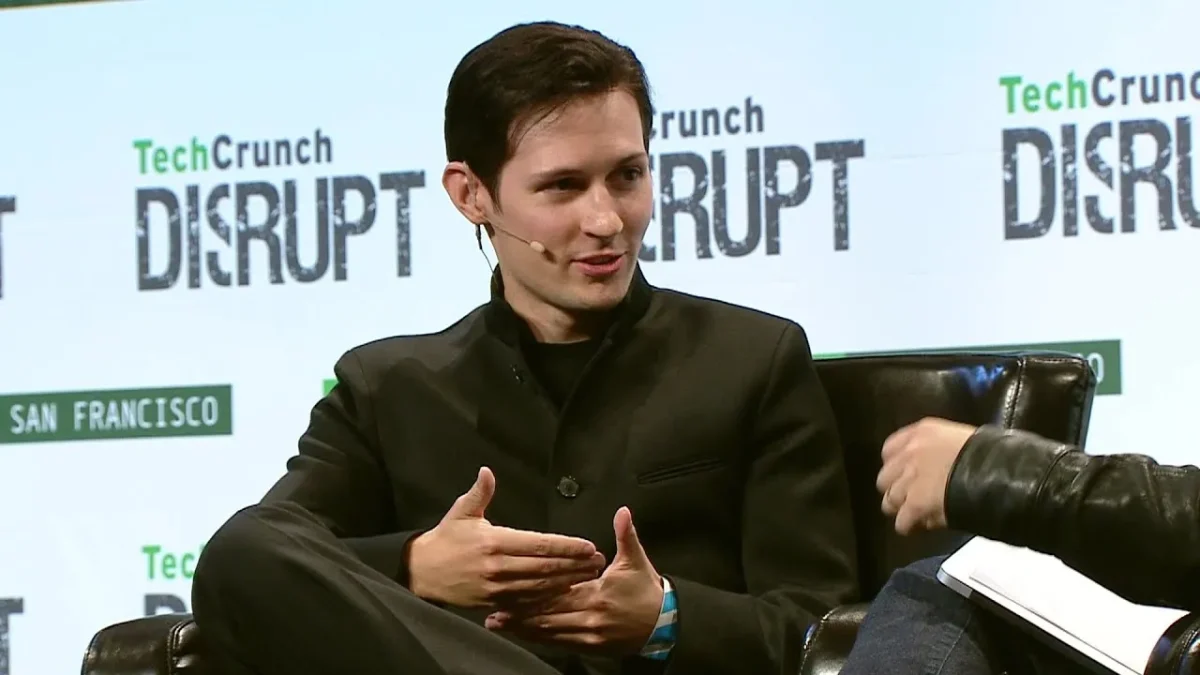

Earlier this month, news emerged across various French news channels that Telegram founder Pavel Durov had been arrested on French soil on charges related to the spread of illicit material on the app.

Durov was arrested on allegations that his app was used to facilitate organized crime in France. Although he has been released on bail, he may still face trial. For much of the world, the arrest itself was unprecedented: owners of social media or messaging platforms are rarely, if ever, held accountable for how their platforms are used.

“In my opinion, this is the correct course of action to be taken,” said Dale Fiess, an environmental studies teacher who follows global news. “These large social media companies like Telegram, Instagram, Facebook, and others should be held accountable for what happens on their platform.”

Some argue that companies enable dangerous activity and real-world harm by allowing illicit material to remain on their platforms. Others counter that companies may overstep their bounds and censor whatever they see fit, limiting genuine interactions and opening the door to biased judgments.

The arrest has reignited debate about freedom of speech, accountability, and the role of companies in content moderation. That debate highlights the need for further discussion of what government action is appropriate. Most agree that a balance must be struck in a world of rapid technological development, where online interactions have become more prevalent than ever.